Hi, I'm Min (Mia) Shi.

Welcome to my GitHub Page! I’m an enthusiastic Data Scientist and Data Engineer with a passion for turning raw data into meaningful insights and solving complex problems. I specialize in building scalable ELT pipelines, designing user-friendly data applications, and creating AI-powered tools. My work includes optimizing workflows with AWS, developing real-time machine learning and deep learning models for tasks like speech-to-text and speaker identification, and empowering teams with interactive dashboards and well-documented processes. Always eager to learn and explore, I’m dedicated to pushing the boundaries of what data can achieve.

About

I’m a Data Scientist and Data Engineer with a unique journey from a quantitative social science Ph.D. background into the dynamic world of data.

My passion for uncovering insights and solving complex problems led me to fully embrace the technical challenges of data science, machine learning, and engineering. I strive not only to build models but also to equip myself with the skills needed to take full ownership of projects—designing pipelines, automating workflows, and delivering impactful, end-to-end solutions.

At The Sunwater Institute, I designed and optimized data pipelines to improve efficiency and deliver reliable, real-time insights. This included building scalable ELT pipelines, automating workflows with AWS, and developing user-friendly data applications that streamlined processes and improved accessibility.

Coming from a social science background, my journey into data has been fueled by a constant desire to learn and grow. I’m driven to explore my full potential, combining everything I’ve learned with a vision for making data accessible, actionable, and transformative.

Let’s connect and discuss the exciting possibilities in the ever-evolving world of data!

- Programming Languages: Python, SQL, Java, JavaScript, HTML, CSS, R, SAS, Stata

- Databases: SQL Server, MySQL, PostgreSQL, MongoDB, Amazon S3/RDS

- Big Data: Hadoop, Sqoop, Hive, Impala, Pig, Spark

- Automation & CI/CD: AWS Glue, Lambda, Boto3, Streamlit, GitHub, Alteryx

- Visualization Tools: Tableau, Power BI, Jupyter

- AI/ML: PyTorch, TensorFlow, Deep Learning, Machine Learning, NLP, Speech Processing, Speech-to-Text, Speaker Identification

- Certificates: AWS Cloud Practitioner, Applied Machine Learning, Google Data Analytics

- Languages: English, Chinese, Japanese

Experience

- Designed and deployed production-ready ML/AI pipelines on AWS (S3, Glue, Lambda, EC2) processing 100+ GB daily, improving efficiency by 40%.

- Built and integrated predictive and prescriptive ML models (logistic regression, gradient boosting, neural networks) into business workflows, achieving 80–90% accuracy across multimodal datasets (audio, video, text).

- Developed and optimized LLM-based NLP pipelines (summarization, Q&A, entity extraction), improving data quality and insight generation for stakeholders.

- Delivered interactive dashboards (Dash/Streamlit) to translate model outputs into actionable business insights for non-technical partners.

- Developed predictive NLP pipelines (speech-to-text + entity extraction) achieving >90% transcript accuracy for congressional hearing data.

- Designed end-to-end ML/ETL workflows in Python, SQL, and PySpark to automate ingestion, transformation, and modeling for large-scale education and policy datasets.

- Implemented automated data validation rules (schema checks, missing values, distribution drift) and anomaly detection on multiple datasets, reducing pipeline failures and transcription errors by 75%.

- Collaborated with cross-functional teams to deliver production-ready predictive analytics and dashboards supporting policy decision-making.

- Built and evaluated 20+ predictive/statistical models (logistic regression, GLMs, time-series, NLP) for international political economy, global health and education projects.

- Applied NLP techniques to free-text survey data, contributing to peer-reviewed publications and evidence-based policy recommendations.

- Managed 10+ concurrent projects, leading a team of five research assistants through data collection, cleaning, modeling, and presentation.

- Summary: Led the creation of an AI-driven chatbot, enhancing customer engagement through advanced NLP techniques.

- Employed NLP and MySQL for analyzing and querying an extensive database containing over 10 million entries.

- Achieved a 25% improvement in response efficiency and 99% prediction accuracy using an XGBoost model.

- Contributed to a 15% rise in user engagement, increasing customer satisfaction and strengthening the company’s image.

- Tools & Skills: Python, SQL, NLP, ML, UI Design, Leadership, Communication

- Summary: Served as a Data Analyst Intern responsible for data management, data visualization, and business analysis.

- Improved the efficiency of data extraction by 40% through data optimization in MySQL.

- Used Microsoft Visio to diagram intricate network structures, making the product easier to understand.

- Produced Business Intelligence (BI) reports, offering insights based on user structures and competitor analysis.

- Tools: MySQL, Microsoft Visio, Microsoft Office

Projects

A six-month solo project developing a social media platform’s backend using HBase, MySQL, and Redis with the Django framework in Python.

- Tools: Python · Django · AWS · HBase · MySQL · Redis

- Maximizing query efficiency by storing objects with HBase, MySQL & Amazon S3 based on query complexity.

- Addressing N+1 slow query issues by implementing Redis caching and denormalization.

- Integrating Celery and RabbitMQ to establish asynchronous workers with varying priority levels.

- Implementing a push model for distributing news feeds to followers efficiently.

- Optimizing memory and resource allocation using recursive small batches of asynchronous tasks.
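The push model and the recursive small-batch idea from the list above can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's actual code: a plain dict stands in for the Redis feed cache, a deque stands in for the Celery/RabbitMQ queue, and `BATCH_SIZE`, `fanout_batch`, `publish`, and `run_worker` are hypothetical names.

```python
from collections import defaultdict, deque

BATCH_SIZE = 3  # hypothetical batch size; a real system would tune this

# In-memory stand-ins (assumptions): the dict plays the role of the Redis
# feed cache, and the deque plays the role of the Celery/RabbitMQ task queue.
feed_cache = defaultdict(list)
task_queue = deque()


def fanout_batch(post_id, follower_ids, start=0):
    """Push post_id to one small batch of followers, then enqueue the rest."""
    batch = follower_ids[start:start + BATCH_SIZE]
    for follower_id in batch:
        # Newest post goes first, as a news feed would display it.
        feed_cache[follower_id].insert(0, post_id)
    next_start = start + BATCH_SIZE
    if next_start < len(follower_ids):
        # Rather than looping over every follower in one long task,
        # schedule a follow-up task that handles the next batch.
        task_queue.append((post_id, follower_ids, next_start))


def publish(post_id, follower_ids):
    """Enqueue the first fan-out task for a new post."""
    task_queue.append((post_id, follower_ids, 0))


def run_worker():
    """Drain the queue and return the number of tasks executed."""
    executed = 0
    while task_queue:
        post_id, follower_ids, start = task_queue.popleft()
        fanout_batch(post_id, follower_ids, start)
        executed += 1
    return executed
```

Keeping each task small bounds the memory any one worker holds and lets higher-priority tasks interleave between batches, which is the usual motivation for this pattern.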

Comprehensive Financial Performance Analytics of the Top Four US Airlines

- Tools: Data Mining · Business Model Analytics

- Analyzed financial data from a 20-year dataset of over 10,000 rows, covering net income, revenue, and expenses across the US airline industry. This deep dive provided insights into long-term financial trends and shifts.

- Conducted financial performance analytics for the top 4 airlines, identifying key turning points related to major events, alliances, and partnerships over the period.

- Assessed operational trends and competitive positioning of each airline, deriving specific business model recommendations based on a two-decade comparison with competitors.

Leveraging Deep Learning CNNs for Disease Diagnosis in Apple Orchards

- Tools: Deep Learning · CNN · Transfer Learning

- Utilized transfer learning on CNNs with 5,590 images in 12 categories, enhancing disease identification accuracy.

- Conducted image transformation, including rotation, flipping, zooming, and noise injections to augment data.

- Fine-tuned ConvNeXt CNN models and achieved 86.8% accuracy, securing a Top 3 ranking in the competition.
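For readers unfamiliar with augmentation, here is a minimal pure-Python sketch of three of the transformations named above (flipping, rotation, and noise injection). It assumes a grayscale image stored as a nested list of 0-255 integers and restricts rotation to 90 degrees; zooming is omitted. The function names are hypothetical, and a real pipeline would more likely use an image library.

```python
import random


def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def add_noise(image, scale, rng):
    """Add integer noise in [-scale, scale], clamped to the 0-255 range."""
    return [
        [min(255, max(0, pixel + rng.randint(-scale, scale))) for pixel in row]
        for row in image
    ]


def augment(image, seed=0):
    """Return three augmented copies; the input image is left unchanged."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return [
        flip_horizontal(image),
        rotate_90_clockwise(image),
        add_noise(image, scale=10, rng=rng),
    ]
```

Each call to `augment` turns one labeled image into several training examples with the same label, which is how augmentation stretches a dataset of a few thousand images.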

Leveraging Web Scraping for Business Prediction via NLP & ML Approaches

- Tools: Web Scraping · Natural Language Processing · Machine Learning Models

- Created an automated web scraping tool to extract more than 7,000 WSJ news articles using specified keywords.

- Analyzed WSJ articles using Count Vectorizer, TF-IDF Vectorizer, and n-gram Count Vectorizer representations.

- Implemented Naïve Bayes and Random Forest models; achieved a notable ROC AUC value and an increase in S&P 500 stock index prediction accuracy by 12%.

- Demonstrated consistent accuracy across various vectorizers, suggesting the potential use of NLP in forecasting stock price changes based on WSJ news articles related to U.S. trade.
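The three text representations mentioned above can be illustrated with a small standard-library sketch. It is a simplified stand-in, not the project's code: it uses whitespace tokenization and the textbook TF-IDF formula, which differs from scikit-learn's smoothed and normalized variant, and the helper names are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately naive tokenizer)."""
    return text.lower().split()


def ngrams(tokens, n):
    """Return all contiguous n-token sequences joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def count_vector(text, n=1):
    """Count n-grams in one document (n=1 gives plain word counts)."""
    return Counter(ngrams(tokenize(text), n))


def tfidf_vectors(docs):
    """Textbook TF-IDF: (term frequency) * log(N / document frequency)."""
    counts = [count_vector(doc) for doc in docs]
    n_docs = len(docs)
    # Number of documents in which each term appears at least once.
    doc_freq = Counter(term for c in counts for term in c)
    vectors = []
    for c in counts:
        total = sum(c.values())
        vectors.append({
            term: (freq / total) * math.log(n_docs / doc_freq[term])
            for term, freq in c.items()
        })
    return vectors
```

With made-up headlines such as `["tariffs raise prices", "tariffs hit exports", "markets rally"]`, the term `tariffs` receives a lower TF-IDF weight in the first document than `prices`, because it appears in two of the three documents.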

Geospatial Truck Fleet Big Data Analytics and Visualization

- Tools: Hadoop ecosystem · Tableau · R

- Used the Hadoop ecosystem for geospatial data ingestion, transformation, and database creation.

- Performed data exploration and visualization in Tableau by connecting it to the Hadoop ecosystem server.

- Modeled how various factors affect truck driver risk, wrote a final report, and proposed ways to lower the probability of large-truck accidents.

Extensive Analysis of Table Spreads Industry

- Tools: SAS · Tableau · Statistical Regression Analysis · Time Series Analysis

- Researched over 1.3 million records to identify key metrics contributing to the sales of top brands.

- Evaluated strengths and weaknesses of Conagra Brands compared to competitors in each sub-category.

- Built machine learning and time series models to predict future directions for Conagra Brands.

Payroll Management System Database Design via MySQL

- Tools: MySQL

- Led a group of five in conducting business requirements analysis and designing a payroll management database with MySQL consisting of 13 tables.

- Increased efficiency in extract-transform-load and payroll database management by 100% via stored functions, procedures, and triggers.

Goldman Sachs Global Business Analytics and Prediction via Python and Alteryx

- Tools: Alteryx · Python · Business Analytics · Time Series Analysis

- Researched and generated datasets on US and worldwide inflation for the pre-pandemic and post-pandemic periods from raw data using Python.

- Cleaned and preprocessed the data, then built ARIMA and ETS time series models in Alteryx to forecast trends in the Consumer Price Index (CPI) and Producer Price Index (PPI).

- Presented key trends and findings on inflation and consumer prices and their impact on Goldman Sachs, with insights and recommendations on global operating strategy based on the analysis.
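As a rough illustration of the ETS family mentioned above, the sketch below implements simple exponential smoothing, its most basic member (no trend or seasonal component). The project itself used Alteryx; this Python function, its name, and the `alpha` parameter are illustrative assumptions only.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after the last observation.

    With no trend or seasonality, this level is also the flat forecast
    for every future period.
    """
    if not series:
        raise ValueError("series must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialize the level with the first observation
    for value in series[1:]:
        # New level = weighted average of the latest value and the old level.
        level = alpha * value + (1 - alpha) * level
    return level
```

A larger `alpha` makes the forecast react faster to recent index values, while a smaller one smooths out short-term noise; with `alpha=1` the forecast is simply the last observation.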

Analysis of the Effect of COVID-19 on US Trade and US Firms

- Tools: Deep Learning · Machine Learning · Statistical Regression Analysis · Communication

- Synthesized data and created fixed-effect regression models to identify correlations and causal mechanisms.

- Developed and implemented machine learning and deep learning models to conduct counterfactual analysis.

- Presented findings at the 2023 Applied Data Science international conference

Modeling U.S.-China Trade War’s effect on US Multinational Corporations

- Tools: Python · R · SQL · Stata · Time Series Analysis

- Generated and managed a new database using PostgreSQL and performed data analysis in Python

- Built time series GARCH models in Stata to examine the effects of U.S.-China trade conflicts on US firms

- Presented the findings at the 2022 International Society for Data Science and Analytics Conference

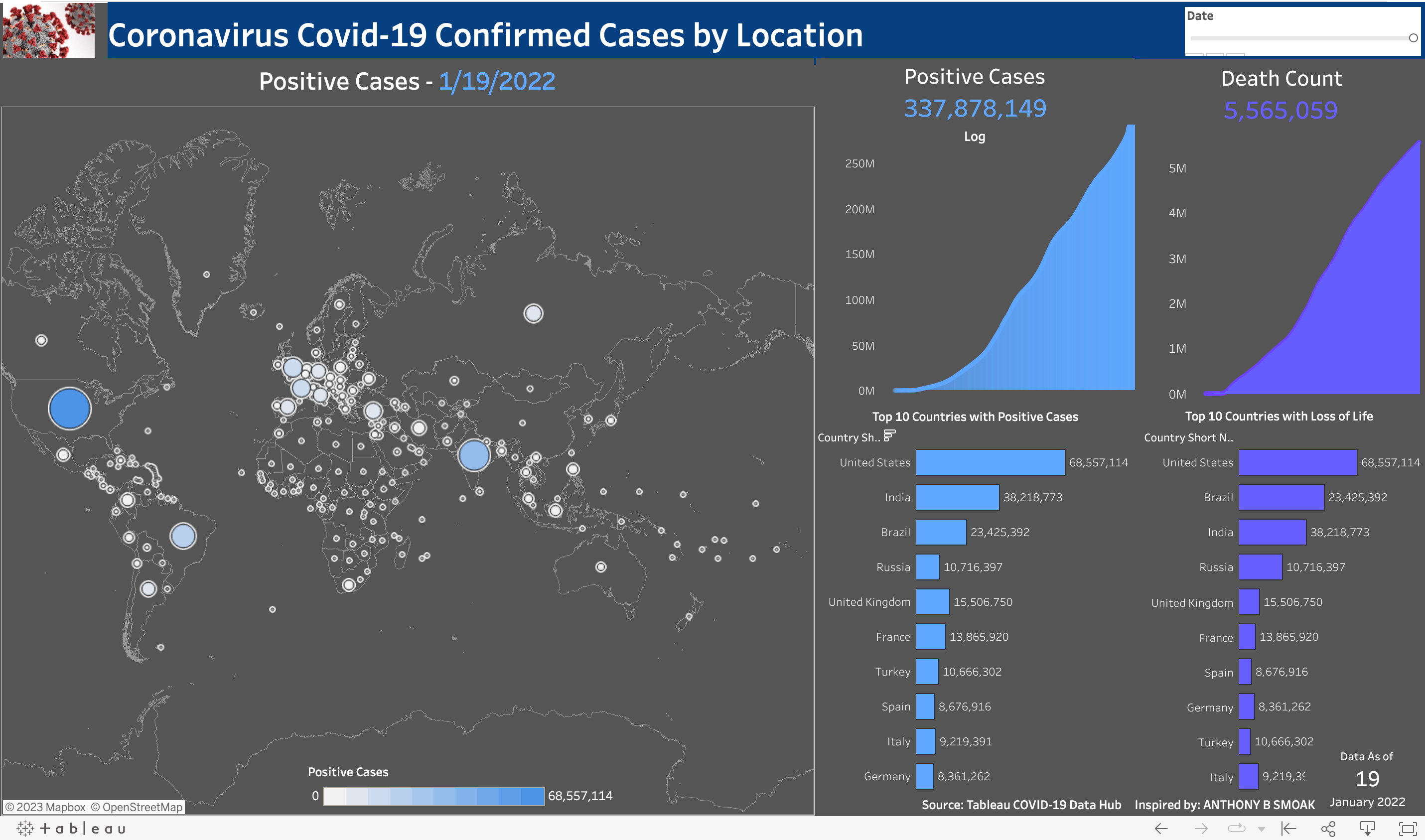

COVID-19 Worldwide Cases Synchronous Dashboard using Tableau

U.S. Multinational Corporation Trade Database with China

- Tools: SQL · Databases · MySQL

- The database serves people interested in the US-China trade war, its effect on US-China trade volumes, and its impact on US multinational corporations (MNCs) that depend heavily on global value chains (GVCs).

- The database provides two main types of data. The first is macro-level data: US-China monthly trade data by commodity and the volume and percentage of products under tariff between the US and China, along with US annual trade with all countries and basic development indicators for those countries, including GDP, population, general tariff rate, and tariff rate for manufactured products. The second is micro-level data on MNCs: the S&P 500 company list with details such as stock symbol, location, sector, and industry; S&P 500 stock price time series; the Fortune 500 company list with annual revenues; Fortune 500 stock price time series; and lists of the top 20 companies by level of sales in China and by share of sales in China.

- The dataset can be used to explore how U.S.-China trade relations have changed in the 21st century, the connection between U.S.-China trade and tariff changes, differences in the trends of US trade with different companies, and how U.S.-China trade relations affect U.S. MNCs.

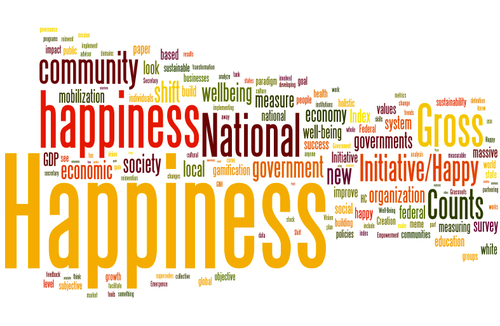

What Factors Affect People’s National Happiness Score?

- Tools: R · R Markdown

- The World Happiness Report is a leading annual report on countries’ happiness and has attracted attention from policymakers in many fields. Happiness scores are based on respondents’ ratings of their own lives. Each report also includes six basic factors: economic production, social support, life expectancy, freedom, absence of corruption, and generosity.

- This paper explores the effect of other potential factors, including regime type, demographic factors, and COVID-19 severity. The regression results indicate that citizens tend to be happier in countries that are more democratic and have larger populations. In addition, population density, net population change, and net change in population density are negatively correlated with a country’s happiness score.

- Data visualization yields two notable findings. First, the relationship between democracy and a country’s happiness index follows a U shape rather than a positive linear trend, which differs from the regression results and does not support H1. Second, population size and population density are negatively related to a country’s happiness index, supporting H2a and H2b. These results indicate that regression results are not reliable in all cases, and that data visualization is needed to examine and interpret them more accurately.

Skills

Programming Languages

Python

SQL

Java

HTML

CSS

JavaScript

R

Cloud Platforms

Amazon Web Services (AWS)

AWS Glue

AWS Lambda

AWS Boto3

Amazon RDS

AWS S3

AWS Transcribe

AWS EC2

AWS ECR

AWS & OpenSearch

Google Cloud

Web Frameworks

Django

Data Application Frameworks

Streamlit

R Shiny

Database & Big Data

SQL Server

MySQL

PostgreSQL

MongoDB

Hadoop

Sqoop

Hive

Impala

Pig

Spark

Tools

Tableau

SAS

Stata

Alteryx

Appian

ACCELQ

UiPath

Jupyter Notebook

Excel Functions

Libraries

NumPy

Pandas

Matplotlib

scikit-learn

SciPy

NLTK

Statsmodels

PyTorch

Selenium

AWS Boto3

Certificates

Graduate Certificate in Applied Machine Learning at UTD

Google Data Analytics Certificate

AWS Certified Cloud Practitioner Certificate

Alteryx Designer Core Certificate

Appian Certified Associate Developer

ACCELQ Automation Engineer Certificate

Languages

English

Chinese

Japanese

Education

The University of Texas at Dallas

Dallas, USA

Degree: Ph.D. in Political Science – Quantitative Statistical Modeling Focused

GPA: 3.95/4.0

Degree: Master of Science in Business Analytics (STEM) – Data Science & Data Engineering Track

GPA: 4.0/4.0

Degree: Master of Science in Social Data Analytics and Research

GPA: 3.95/4.0

Degree: Master of Arts in Political Science

GPA: 3.95/4.0

Jinan, China

Degree: Master of Law in International Politics

GPA: 88.78/100

Degree: Bachelor of Arts in Japanese

GPA: 87.37/100